Future versions of Apple’s Siri could go beyond voice recognition.

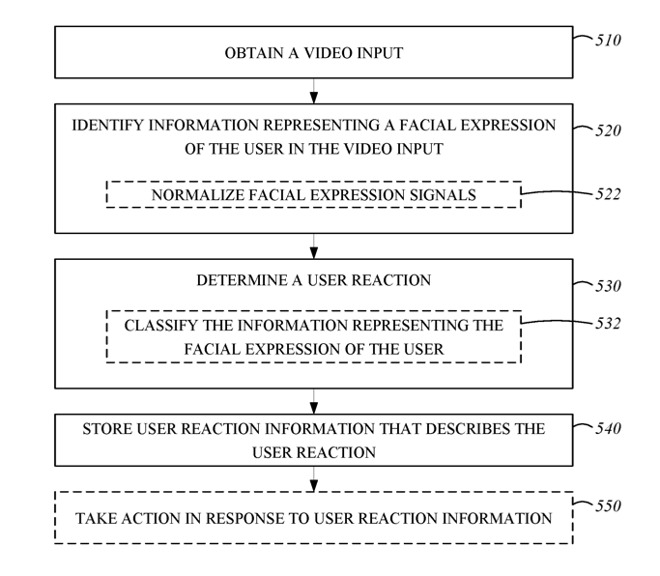

Apple is developing a way to help interpret a user’s requests by adding facial analysis to a future version of Siri or another system. The goal is to reduce how often a voice request is misinterpreted by analyzing the user’s emotions.

“Voice assistants can perform actions on behalf of a user,” says Apple in US patent number 20190348037. “Actions can be performed in response to user input in natural language, such as a sentence pronounced by the user. In some circumstances, an action taken by an intelligent software agent may not match the action the user intended.”

“For example,” the patent continues, “the image of the face in the video input can be analyzed to determine if certain muscles or muscle groups are activated by identifying forms or movements.”

Part of the system involves the use of facial recognition to identify the user and then provide customized actions such as retrieving that person’s email or playing personal music playlists.

It is also intended, however, to read a user’s emotional state.

“[The] user reaction information is expressed as one or more metrics as a probability that the user’s reaction matches a certain status as positive or negative,” the patent continues, “or a degree where the user is expressing the reaction.”

This could help in a situation where the spoken command can be interpreted in different ways. In that case, Siri could calculate the most likely meaning and act on it, then use facial recognition to see whether the user is satisfied.
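The patent text quoted here does not spell out an algorithm, so the following is only a minimal sketch of the "act, then check the reaction" idea under stated assumptions. The names `Interpretation`, `resolve`, `reaction_after` and the `NEGATIVE_THRESHOLD` value are hypothetical and chosen for illustration; they are not taken from Apple's filing.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical cutoff: above this, the reaction is treated as negative.
NEGATIVE_THRESHOLD = 0.5


@dataclass
class Interpretation:
    action: str          # what the assistant would do
    probability: float   # how likely this reading of the command is


def resolve(candidates: List[Interpretation],
            reaction_after: Callable[[str], float]) -> Optional[str]:
    """Try readings from most to least likely until one is not rejected.

    `reaction_after(action)` stands in for performing the action and then
    estimating, from camera images, the probability that the user's
    reaction is negative.
    """
    ranked = sorted(candidates, key=lambda c: c.probability, reverse=True)
    for candidate in ranked:
        p_negative = reaction_after(candidate.action)
        if p_negative < NEGATIVE_THRESHOLD:
            return candidate.action  # user appears satisfied
    return None  # every reading drew a negative reaction


# Illustrative use with made-up numbers.
candidates = [
    Interpretation("play the song 'Hello'", 0.6),
    Interpretation("call the contact 'Hello Cafe'", 0.4),
]
fake_reactions = {
    "play the song 'Hello'": 0.9,          # user frowns
    "call the contact 'Hello Cafe'": 0.1,  # user looks content
}
chosen = resolve(candidates, lambda action: fake_reactions[action])
```

In this made-up run the most likely reading is tried first, draws a negative reaction, and the assistant falls back to the second reading.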

The system works “by obtaining, through a microphone, an audio input and obtaining, through a camera, one or more images.” Apple notes that expressions can have different meanings, but its method classifies the range of possible meanings according to the Facial Action Coding System (FACS).

This is a standard for facial taxonomy, first created in the 1970s, which classifies every possible facial expression into a large reference catalog.

Using FACS, Apple’s system assigns scores to determine the probability of a correct interpretation and then has Siri react or respond accordingly.
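As a rough illustration of how FACS-based scores could become a probability, here is a minimal sketch. FACS does define numbered action units such as AU6 (cheek raiser), AU12 (lip corner puller), AU4 (brow lowerer) and AU15 (lip corner depressor), but the weights, the 0.0 to 1.0 intensity scale and the logistic mapping below are assumptions made for this example, not details from the patent.

```python
import math
from typing import Dict

# Hypothetical weights: positive values push toward a "positive" reaction.
AU_WEIGHTS: Dict[int, float] = {
    6: 1.0,    # AU6, cheek raiser
    12: 2.0,   # AU12, lip corner puller (smile)
    4: -1.5,   # AU4, brow lowerer
    15: -2.0,  # AU15, lip corner depressor
}


def positive_probability(au_intensities: Dict[int, float]) -> float:
    """Map detected action-unit intensities (assumed 0.0 to 1.0) to the
    probability that the reaction is positive, via a logistic function."""
    score = sum(AU_WEIGHTS.get(au, 0.0) * intensity
                for au, intensity in au_intensities.items())
    return 1.0 / (1.0 + math.exp(-score))


smile = positive_probability({6: 0.8, 12: 0.9})   # cheeks raised, lips pulled up
frown = positive_probability({4: 0.7, 15: 0.6})   # brows lowered, lips pulled down
neutral = positive_probability({})                # no action units detected
```

With no action units detected the sketch returns exactly 0.5, a smile-like combination scores above that, and a frown-like one scores below it. An assistant could compare this value against a threshold to decide whether to keep or revise its interpretation.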
